Best Ways for Web Scraping

You need to make your scraper undetectable to be able to extract data from a webpage, and the two main techniques for that are imitating a real browser and simulating human behavior. For example, a normal user wouldn't make 100 requests to a website in one minute.
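One simple way to simulate human pacing is to wait a random interval before each request. The sketch below assumes a delay of 2 to 6 seconds, which limits a single worker to roughly 30 requests per minute. The class name `HumanLikeThrottle` and the delay range are illustrative choices, not values any particular site requires.

```python
import random
import time


class HumanLikeThrottle:
    """Pause for a random interval before each request (illustrative helper)."""

    def __init__(self, min_delay=2.0, max_delay=6.0, rng=None, sleep=time.sleep):
        if not 0 <= min_delay <= max_delay:
            raise ValueError("expected 0 <= min_delay <= max_delay")
        self.min_delay = min_delay
        self.max_delay = max_delay
        # Injectable RNG and sleep function make the throttle easy to test.
        self._rng = rng or random.Random()
        self._sleep = sleep

    def wait(self):
        """Sleep for a uniformly random delay and return how long it slept."""
        delay = self._rng.uniform(self.min_delay, self.max_delay)
        self._sleep(delay)
        return delay


# Usage sketch (fetch() is a placeholder for your own HTTP call):
# throttle = HumanLikeThrottle()
# for url in urls:
#     throttle.wait()
#     html = fetch(url)
```

Randomizing the delay avoids a perfectly regular request rhythm, which is another pattern that real users don't produce.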

1. Set Real Request Headers

As we mentioned, your scraper's activity should look as similar as possible to a regular user browsing the target website. Web browsers send many headers, such as User-Agent, Accept, and Accept-Language, that HTTP clients and libraries either omit or fill with default values that identify them.
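By default, Python's `urllib` identifies itself with a `Python-urllib/3.x` User-Agent, which is easy to flag. A minimal standard-library sketch attaches browser-like headers to every request. The header values below are illustrative assumptions modelled on desktop Chrome. Copy the ones your own browser actually sends (visible in its developer tools) and keep them current. `Accept-Encoding` is deliberately left out because `urllib` does not decompress gzip responses for you.

```python
import urllib.request

# Illustrative desktop-Chrome-style headers; replace with values from your own browser.
BROWSER_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36"
    ),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
    "Referer": "https://www.google.com/",
}


def build_request(url):
    """Create a Request that carries browser-like headers instead of urllib's defaults."""
    return urllib.request.Request(url, headers=BROWSER_HEADERS)


def fetch_html(url, timeout=10):
    """Download url with the browser-like headers and decode the body as text."""
    with urllib.request.urlopen(build_request(url), timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")
```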

2. Use Proxies

If your scraper makes too many requests from an IP address, websites can block that IP. In that case, you can use a proxy server with a different IP. It'll act as an intermediary between your web scraping script and the website host.

There are many types of proxies. Using a free proxy, you can start testing how to integrate proxies with your scraper or crawler. You can find one in the Free Proxy List.

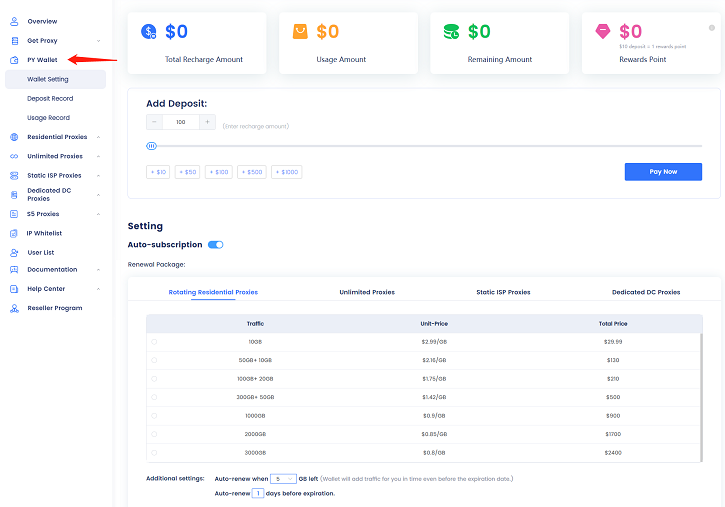

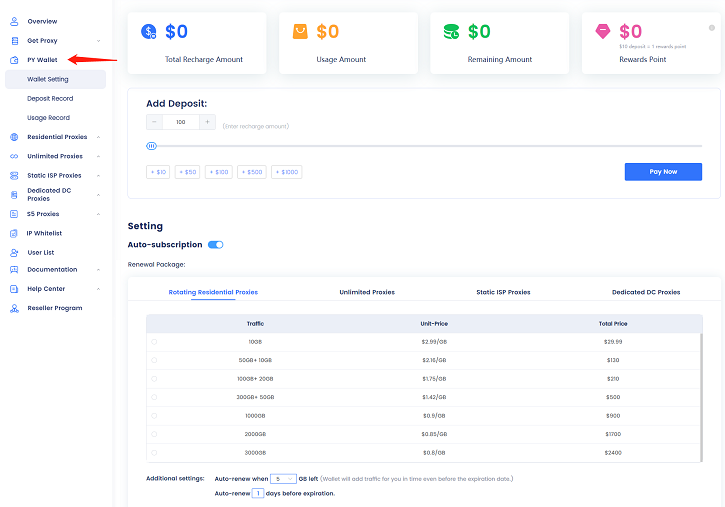
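To route a standard-library client through a proxy, you only need a `ProxyHandler`. The sketch below also rotates through a small pool, so consecutive requests leave from different IPs. The addresses are placeholders from the 203.0.113.0/24 documentation range. `next_proxy_opener` and `fetch_via_next_proxy` are hypothetical helper names. Substitute the proxies you are testing.

```python
import itertools
import urllib.request

# Placeholder proxies (documentation IP range); replace with real proxy URLs.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]
_proxy_pool = itertools.cycle(PROXIES)


def next_proxy_opener():
    """Return (proxy_url, opener) routing HTTP and HTTPS through the next proxy."""
    proxy = next(_proxy_pool)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return proxy, urllib.request.build_opener(handler)


def fetch_via_next_proxy(url, timeout=10):
    """Fetch url through the next proxy in the pool and return the raw body."""
    proxy, opener = next_proxy_opener()
    with opener.open(url, timeout=timeout) as resp:
        return resp.read()
```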

3. Use Premium Proxies for Web Scraping

High-speed and reliable proxies with residential IPs are sometimes referred to as premium proxies. For production crawlers and scrapers, it's common to use these types of proxies.

When selecting a proxy service, it's important to check that it works well for web scraping. If you pay for a high-speed, private proxy whose only IP then gets blocked by your target website, you might have just thrown your money down the drain.
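One way to check whether a proxy works for your target is to track how often responses through it look like blocks. This sketch rests on several assumptions. It treats HTTP 403 and 429 as blocks and waits for 20 requests before judging a proxy. It also drops any proxy whose block rate exceeds 20%. `ProxyHealthTracker` and these thresholds are illustrative and are not part of any provider's API.

```python
# Status codes treated as "blocked by the target"; an assumption, adjust per site.
BLOCK_STATUSES = {403, 429}


class ProxyHealthTracker:
    """Track per-proxy block rates and flag proxies the target seems to reject."""

    def __init__(self, min_requests=20, max_block_rate=0.2):
        self.min_requests = min_requests
        self.max_block_rate = max_block_rate
        self._total = {}
        self._blocked = {}

    def record(self, proxy, status):
        """Record the HTTP status of one request sent through proxy."""
        self._total[proxy] = self._total.get(proxy, 0) + 1
        if status in BLOCK_STATUSES:
            self._blocked[proxy] = self._blocked.get(proxy, 0) + 1

    def block_rate(self, proxy):
        total = self._total.get(proxy, 0)
        return self._blocked.get(proxy, 0) / total if total else 0.0

    def is_healthy(self, proxy):
        # Give new proxies the benefit of the doubt until enough data exists.
        if self._total.get(proxy, 0) < self.min_requests:
            return True
        return self.block_rate(proxy) <= self.max_block_rate

    def healthy_proxies(self, proxies):
        return [p for p in proxies if self.is_healthy(p)]
```

Running a trial period like this against your real target, before committing to a provider, tells you more than the provider's own speed figures.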

4. Use Headless Browsers

To avoid being blocked when web scraping, you want your interactions with the target website to look like those of regular users visiting the URLs. One of the best ways to achieve that is to use a headless web browser: a real web browser that works without a graphical user interface.

Most popular web browsers, like Google Chrome and Firefox, support headless mode. However, even if you use an official browser in headless mode, you need to make its behavior look real. It's common to set certain request headers, such as the User-Agent, to achieve that.
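Headless Chrome's default User-Agent contains the token `HeadlessChrome`, which gives it away. This minimal sketch launches Chrome in headless mode with an overridden User-Agent and prints the rendered DOM. It assumes a Chrome binary named `google-chrome` is on your `PATH`; the name differs by platform. `--headless=new`, `--user-agent`, and `--dump-dom` are Chrome command-line switches. The UA string is an illustrative value that should match the Chrome version you run.

```python
import shutil
import subprocess

# Illustrative desktop Chrome UA without the "HeadlessChrome" token; keep the
# version number in line with the Chrome build you actually run.
USER_AGENT = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36"
)


def headless_chrome_command(url, chrome_binary="google-chrome", user_agent=USER_AGENT):
    """Build the argument list for a one-shot headless Chrome render of url."""
    return [
        chrome_binary,
        "--headless=new",              # run without a graphical interface
        f"--user-agent={user_agent}",  # replace the default HeadlessChrome UA
        "--dump-dom",                  # print the rendered DOM to stdout
        url,
    ]


def render_page(url, chrome_binary="google-chrome", timeout=60):
    """Return the page's DOM after JavaScript has run (requires Chrome installed)."""
    if shutil.which(chrome_binary) is None:
        raise FileNotFoundError(f"{chrome_binary!r} was not found on PATH")
    result = subprocess.run(
        headless_chrome_command(url, chrome_binary),
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,
    )
    return result.stdout
```

Changing the User-Agent switch does not hide every difference between headless and headed browsers. For interactive scraping (clicking, scrolling, logging in), a browser automation library such as Playwright or Selenium is the more usual choice.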

5. Outsmart Honeypot Traps

Some websites set up honeypot traps. These are mechanisms designed to attract bots while going unnoticed by real users. They can confuse crawlers and scrapers by making them work with fake data.
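A common honeypot is a link hidden with CSS, so that only a bot reading the raw HTML would follow it. The sketch below uses the standard library's `html.parser` to keep such links out of the crawl queue. It skips links that carry a `hidden` attribute or an inline `display:none` or `visibility:hidden` style. This is a heuristic with clear limits. It does not see styles applied through stylesheets or classes, and it misses links inside a hidden parent element. `VisibleLinkExtractor` is a hypothetical name.

```python
from html.parser import HTMLParser


def is_hidden(attrs):
    """Heuristic: True if an element's own attributes hide it from users."""
    attr_map = {name: (value or "") for name, value in attrs}
    if "hidden" in attr_map:
        return True
    style = attr_map.get("style", "").replace(" ", "").lower()
    return "display:none" in style or "visibility:hidden" in style


class VisibleLinkExtractor(HTMLParser):
    """Collect hrefs, separating visible links from likely honeypot links."""

    def __init__(self):
        super().__init__()
        self.visible_links = []
        self.skipped_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        if is_hidden(attrs):
            self.skipped_links.append(href)  # likely honeypot: don't follow
        else:
            self.visible_links.append(href)
```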

6. Avoid Fingerprinting

If you change many parameters in your requests but your scraper is still blocked, you might have been fingerprinted. That is, the anti-bot system combines characteristics of your requests or browser into an identifier that it can recognize and block.
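Changing headers at random on every request can make things worse. For example, a Windows User-Agent paired with a macOS platform hint is an inconsistency a fingerprinting system can notice. A sketch of the alternative assumes you maintain a few coherent profiles yourself. It picks one profile per session and keeps it for every request in that session. The profile values and the `ScraperSession` class are illustrative. Header consistency is only one part of a fingerprint, which can also include TLS and browser properties.

```python
import random

# Hypothetical, internally consistent profiles: each describes one browser/OS combo.
PROFILES = [
    {
        "User-Agent": ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                       "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36"),
        "Sec-CH-UA-Platform": '"Windows"',
        "Accept-Language": "en-US,en;q=0.9",
    },
    {
        "User-Agent": ("Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
                       "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36"),
        "Sec-CH-UA-Platform": '"macOS"',
        "Accept-Language": "en-GB,en;q=0.9",
    },
]


class ScraperSession:
    """Pin one coherent profile for a whole session instead of mixing values."""

    def __init__(self, rng=None):
        rng = rng or random.Random()
        self.headers = dict(rng.choice(PROFILES))

    def headers_for_request(self, extra=None):
        """Return the session's headers plus any per-request extras (e.g. Referer)."""
        headers = dict(self.headers)
        if extra:
            headers.update(extra)
        return headers
```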

7. Bypass Anti-bot Systems

If your target website uses Cloudflare, Akamai, or a similar anti-bot service, your requests may be blocked before you can scrape the page. Bypassing these systems is challenging but possible.

Cloudflare, for example, uses several bot-detection methods. One of its most visible tools is the interstitial challenge page (sometimes called a "waiting room") that checks visitors before the site loads. Even if you're not a bot, you have probably seen this type of screen.
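Before trying any workaround, your scraper needs to notice that it received a challenge page instead of content. Otherwise it silently stores the interstitial's HTML. Cloudflare documents a `cf-mitigated: challenge` response header for challenge responses, and challenge pages commonly carry the title "Just a moment...". The body markers and status codes below are assumptions based on commonly observed pages, not a stable contract. `looks_like_challenge` is a hypothetical helper.

```python
# Assumed markers seen on typical Cloudflare challenge pages; not a stable contract.
CHALLENGE_BODY_MARKERS = ("<title>Just a moment...</title>", "/cdn-cgi/challenge-platform/")
CHALLENGE_STATUSES = {403, 429, 503}


def looks_like_challenge(status, headers, body):
    """Heuristically decide whether a response is an anti-bot challenge page."""
    # HTTP header names are case-insensitive, so normalize before lookup.
    lowered = {name.lower(): value for name, value in headers.items()}
    if lowered.get("cf-mitigated", "").strip().lower() == "challenge":
        return True
    # Without the header, only trust body markers on error-style status codes.
    return status in CHALLENGE_STATUSES and any(m in body for m in CHALLENGE_BODY_MARKERS)
```

When this returns `True`, the scraper can back off or retry the URL in a headless browser instead of saving the page. With `urllib`, you can pass `resp.headers` directly, because it supports `.items()`.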

8. Automate CAPTCHA Solving

CAPTCHAs are among the most difficult obstacles when scraping a URL. These challenges are specifically designed to tell humans apart from bots, and they're usually placed on pages with sensitive information.
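Automating CAPTCHA handling starts with recognizing that a page contains one. The widgets' standard embed snippets use the `g-recaptcha` (reCAPTCHA), `h-captcha` (hCaptcha), and `cf-turnstile` (Cloudflare Turnstile) classes, and the sketch below looks for them with `html.parser`. Solving is delegated to a `solver` callback. This is a hypothetical hook where you would plug in whatever solving service or manual step you use; no real solver API is implied.

```python
from html.parser import HTMLParser

# Class names from the standard embed snippets of common CAPTCHA widgets.
CAPTCHA_CLASSES = {"g-recaptcha": "recaptcha", "h-captcha": "hcaptcha", "cf-turnstile": "turnstile"}


class _CaptchaFinder(HTMLParser):
    """Record the first known CAPTCHA widget class found in the markup."""

    def __init__(self):
        super().__init__()
        self.found = None

    def handle_starttag(self, tag, attrs):
        if self.found:
            return
        for cls in (dict(attrs).get("class") or "").split():
            if cls in CAPTCHA_CLASSES:
                self.found = CAPTCHA_CLASSES[cls]
                return


def detect_captcha(html):
    """Return 'recaptcha', 'hcaptcha', 'turnstile', or None."""
    finder = _CaptchaFinder()
    finder.feed(html)
    return finder.found


def handle_page(html, solver=None):
    """Return html as-is, or pass CAPTCHA pages to a (hypothetical) solver callback."""
    provider = detect_captcha(html)
    if provider is None:
        return html
    if solver is None:
        raise RuntimeError(f"{provider} CAPTCHA detected and no solver configured")
    return solver(provider, html)
```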

9. Use APIs to Your Advantage

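Currently, much of the information that websites display comes from APIs. This data is difficult to scrape from the HTML because it's usually requested dynamically with JavaScript after the user performs some action. The upside is that you can often request the same API endpoint directly and receive structured data, usually JSON, instead of parsing the page.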
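To find the underlying API, watch the browser's developer tools (Network tab) while performing the action and look for the JSON request. You can then often call that endpoint directly. In this sketch, the endpoint URL, the `page`/`per_page` parameters, and the `items` key are all hypothetical. Real APIs name these differently, and some require the same headers or cookies the page sends.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical endpoint; find the real one in DevTools > Network.
API_BASE = "https://example.com/api/products"


def build_api_url(page, per_page=50):
    """Build a paginated API URL (parameter names are assumptions)."""
    query = urllib.parse.urlencode({"page": page, "per_page": per_page})
    return f"{API_BASE}?{query}"


def parse_items(payload):
    """Extract the list of items from a JSON response body (bytes or str)."""
    data = json.loads(payload)
    return data.get("items", [])


def fetch_page(page, timeout=10):
    """Request one page of results and return its items."""
    req = urllib.request.Request(build_api_url(page), headers={"Accept": "application/json"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_items(resp.read())
```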

10. Stop Repeated Failed Attempts

One of the most suspicious situations for a webmaster is seeing a large number of failed requests. At first, they may not suspect a bot is the cause, but they will start investigating.

However, if they find that the errors come from a bot trying to scrape their data, they'll block your web scraper. That's why it's best to detect and log failed attempts and get notified when they happen, so you can suspend scraping.
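The "detect, log, notify, suspend" loop can be sketched as a small circuit breaker. After a configurable number of consecutive failures, it stops allowing requests for a cooldown period. The sketch assumes the standard `logging` module for logs and a `notify` callback you supply (email, chat webhook, or similar; hypothetical here). The defaults of 5 failures and a 10-minute cooldown are illustrative.

```python
import logging
import time

logger = logging.getLogger("scraper")


class FailureCircuitBreaker:
    """Suspend scraping after too many consecutive failed requests."""

    def __init__(self, max_consecutive_failures=5, cooldown_seconds=600.0,
                 notify=None, clock=time.monotonic):
        self.max_consecutive_failures = max_consecutive_failures
        self.cooldown_seconds = cooldown_seconds
        self._notify = notify  # hypothetical alert hook, e.g. posts a chat message
        self._clock = clock    # injectable for testing
        self.consecutive_failures = 0
        self.suspended_until = float("-inf")

    def allow_request(self):
        """True unless the breaker is inside its cooldown window."""
        return self._clock() >= self.suspended_until

    def record_success(self):
        self.consecutive_failures = 0

    def record_failure(self, url, status):
        """Log a failure and suspend scraping once the threshold is reached."""
        self.consecutive_failures += 1
        logger.warning("Request to %s failed with status %s (%d in a row)",
                       url, status, self.consecutive_failures)
        if self.consecutive_failures >= self.max_consecutive_failures:
            self.suspended_until = self._clock() + self.cooldown_seconds
            self.consecutive_failures = 0
            message = (f"Scraping suspended for {self.cooldown_seconds:.0f}s "
                       f"after repeated failures (last: {url}, status {status})")
            logger.error(message)
            if self._notify is not None:
                self._notify(message)
```

Before each request, the scraper checks `allow_request()`. It then calls `record_success()` or `record_failure()` depending on the response.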
